Most data structures are designed to hold arbitrary amounts of data. When we talk about their complexity in time and space, we use big O notation, which is only concerned with performance characteristics as n grows arbitrarily large. Understanding how to cast an O(n) problem as O(log n) or even O(1) is certainly valuable, and necessary for much of the work we do at Factual. And yet, most instances of data structures used in non-numerical software are very small. Most lists are tuples of a few entries, and most maps are a few keys representing different facets of related data. These may be elements in a much larger collection, but this still means that the majority of operations we perform are on small instances.

But except in special cases, like 2- or 3-vectors that represent coordinates, it’s rarely practical to specify that a particular tuple or map will always have a certain number of entries. And so our data structures have to straddle both cases, behaving efficiently at all possible sizes. Clojure, however, uses immutable data structures, which means it can do an end run around this problem. Each operation returns a new collection, which means that if we add an element to a small collection, it can return something more suited to hold a large collection.

Clojure already takes advantage of this, by representing maps with eight or fewer elements as a PersistentArrayMap, and everything else as a PersistentHashMap. A PersistentArrayMap is pretty much what it sounds like: it has an Object[16] array that holds keys and values in alternating slots, and for lookups will walk each key and do an equality check. This is less naive than it may seem; over small arrays, linear scans are often faster, both due to simpler code and memory access coherency. For this reason, many quicksort implementations will use insertion sort at the leaves.

A map with fewer than eight elements will just have empty slots:

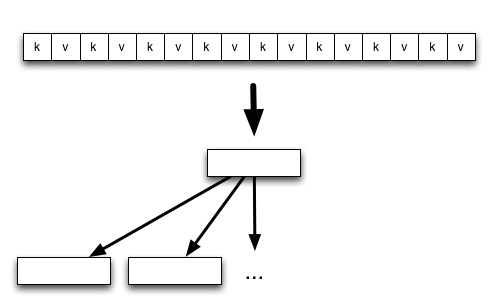

but if an entry is added to a full PersistentArrayMap, it will spill over into a PersistentHashMap, which under the covers is a 32-way tree.
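The spill-over behavior can be sketched in a few dozen lines of Java. This is only an illustration under stated assumptions, not Clojure's implementation: `TinyMap`, `ArrayBacked`, and `HashBacked` are hypothetical names, the array is sized exactly rather than preallocated with empty slots, and a copied `java.util.HashMap` stands in for the 32-way tree.

```java
// Hypothetical sketch: an immutable map that scans a flat array while small
// and switches representation once it grows past a threshold.
interface TinyMap {
    Object get(Object key);
    TinyMap assoc(Object key, Object val); // returns a new map, never mutates
    int count();
}

final class ArrayBacked implements TinyMap {
    static final int MAX_ENTRIES = 8;      // mirrors the eight-entry limit described above
    static final TinyMap EMPTY = new ArrayBacked(new Object[0]);
    private final Object[] kvs;            // k0, v0, k1, v1, ...

    ArrayBacked(Object[] kvs) { this.kvs = kvs; }

    // Linear scan over the keys, which sit in the even slots.
    private int indexOf(Object key) {
        for (int i = 0; i < kvs.length; i += 2) {
            if (java.util.Objects.equals(kvs[i], key)) return i;
        }
        return -1;
    }

    public Object get(Object key) {
        int i = indexOf(key);
        return i < 0 ? null : kvs[i + 1];
    }

    public int count() { return kvs.length / 2; }

    public TinyMap assoc(Object key, Object val) {
        int i = indexOf(key);
        if (i >= 0) {                      // replace: copy, then overwrite one slot
            Object[] copy = kvs.clone();
            copy[i + 1] = val;
            return new ArrayBacked(copy);
        }
        if (count() == MAX_ENTRIES) {      // full: spill into the hash-backed form
            java.util.HashMap<Object, Object> m = new java.util.HashMap<>();
            for (int j = 0; j < kvs.length; j += 2) m.put(kvs[j], kvs[j + 1]);
            m.put(key, val);
            return new HashBacked(m);
        }
        Object[] copy = java.util.Arrays.copyOf(kvs, kvs.length + 2);
        copy[kvs.length] = key;
        copy[kvs.length + 1] = val;
        return new ArrayBacked(copy);
    }
}

final class HashBacked implements TinyMap {
    private final java.util.HashMap<Object, Object> m;
    HashBacked(java.util.HashMap<Object, Object> m) { this.m = m; }
    public Object get(Object key) { return m.get(key); }
    public int count() { return m.size(); }
    public TinyMap assoc(Object key, Object val) {
        // Naive full copy; a real persistent map would share structure instead.
        java.util.HashMap<Object, Object> copy = new java.util.HashMap<>(m);
        copy.put(key, val);
        return new HashBacked(copy);
    }
}
```

Because `assoc` always returns a new object, the caller never needs to know which representation it is holding; the ninth distinct key simply comes back wrapped in a different class.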

This has a very nice property: we can use approaches which are only optimal at a small size without suffering drawbacks as the collection grows. So nice, in fact, that a year ago I adapted it for vectors in a library called clj-tuple, which implemented separate types for each vector cardinality, up to six elements. The library is discussed in more detail in this talk, but the general idea is that even the most basic operations in a vector require iteration, often via a java.util.Iterator, since much of Clojure tries to provide general implementations for equality, hash calculations, and other similar operations. If we have a type of vector which can only be created by adding an element to an empty vector, we can guarantee there’s only one element, and remove any need for iteration. As we proceed, inductively, to 2-vectors and 3-vectors and so on, we can make sure that all the operations that normally require iteration are unrolled.

In clj-tuple, special vectors were generated via macros for every cardinality up to six, after which it spilled over into a standard Clojure vector. This made certain operations, especially those where a vector was used as a key in a map, significantly faster. It also suggested that while not entirely general, the PersistentArrayMap could be made more specific. When adding or replacing an entry, it must copy the entire array, update the indices, and instantiate a new wrapper. Even when there’s a single entry, it must go through the ceremony of iterating over that entry.

While at Clojure West, I spoke to Rich Hickey, who said that he’d be happy to accept a patch which implemented unrolled maps and vectors, as long as it was in Java. Months later, at a Factual hackathon, I got around to actually attempting to implement this. The result is 1000 lines of Clojure, which generated 5500 lines of Java, which are currently awaiting review to be merged into Clojure.

writing java with clojure

Clojure can call Java functions via JVM bytecode, but there’s no special interop between Clojure and Java source code. So when I started out, I wrote something like this:

    (defn class
      [{:keys [modifiers implements extends]} name & body]
      (str
        (->> modifiers (interpose " ") (apply str))
        " class " name
        (when extends
          (str " extends " extends))
        (when implements
          (str " implements "
            (->> implements (interpose ", ") (apply str))))
        "{ "
        (apply str body)
        " }"))

This just concatenates a bunch of strings together into something which is valid Java, albeit a little difficult to read:

    > (class
        {:modifiers '[abstract]}
        'AbstractSingletonVisitor
        "Object singleton;")
    "abstract class AbstractSingletonVisitor{ Object singleton; }"

This is perfectly valid Java which, with a package header and a proper file name, would be happily compiled by javac. But this workflow leaves a lot to be desired. My string-concatenated Java is bound to have some missing semicolons or other such issues, and when I pass my barely-formatted Java into javac, it will give an error referencing line 1, column 50000. Mapping the compiler error back into the code which generated it will be a chore, to say the least.

This approach would also mean that I could only work with code which can be compiled, which means that the code needs to not only be syntactically valid, but also have proper package and import headers, a surrounding class with all the necessary fields and methods defined, and so on. This is greatly at odds with my typical Clojure implementation pattern, which involves writing small bits of functionality, testing each at the REPL, and building upwards from there. The goal is to quickly invalidate dead ends, and keep yourself moving constantly forward.

For this reason, my first four hours were spent figuring out how to hijack the Java parsing and formatting mechanisms in Eclipse, and use them for my own questionable purposes. The resulting function was only six lines long:

    (import
      'org.eclipse.jdt.core.formatter.CodeFormatter
      'org.eclipse.jdt.internal.formatter.DefaultCodeFormatter
      'org.eclipse.jface.text.Document)

    (defn format-java [s]
      (let [f (DefaultCodeFormatter.)
            te (.format f CodeFormatter/K_UNKNOWN s 0 (count s) 0 nil)
            d (Document. s)]
        (.apply te d)
        (.get d)))

(NB: the dependency versions to make this work are hilariously specific)

This function takes a string representing possibly-correct Java, and either returns a pretty-printed version of the Java, or throws an exception if it’s invalid. Unlike javac, this will format Java snippets, not just full class files:

    > (format-java "1+2+3")
    "1 + 2 + 3"

    > (println
        (format-java
          "private void sayHello(){ System.out.println(\"hello\"); }"))
    private void sayHello() {
        System.out.println("hello");
    }

With this, I could begin to build up my data structure implementations piecemeal, testing them each step of the way. If an input threw an exception, I could narrow the input until I hit upon exactly what was causing the issue.

This didn’t catch even simple semantic issues, like misspelling the name of a method, which meant that I still needed to rely on the compiler to validate the final code. However, since the input to the compiler was now well-formatted, it was much easier to map the Java code back onto the Clojure which had generated it.

implementing persistent vectors

Clojure data structures serve two masters: the clojure.lang.* interfaces that are used by Clojure functions, and the java.util.* interfaces that allow Clojure’s data structures to be consumed by normal Java libraries. This means that many methods they have to implement are redundant, like size() on java.util.Collection and count() on clojure.lang.Counted.

This makes implementing collections that behave like Clojure’s base collections fairly tedious, and easy to screw up: if you forget to implement size(), everything will work fine in Clojure, but fail months later when you pass it into a Java library. There are libraries which try to make this easier, but it remains a fundamental pain point for Clojure developers trying to venture off the beaten path. Clojure’s own implementation uses abstract classes to hide away most of the boilerplate, but Clojure has poor interop with abstract classes.
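To make the redundancy concrete, here is a minimal sketch of a two-element collection that answers to both worlds. `Counted` below is a local stand-in for clojure.lang.Counted so the example compiles on its own, and `Pair` is a hypothetical name, not a class from the patch.

```java
// Hypothetical stand-in for clojure.lang.Counted, declared locally for the sketch.
interface Counted {
    int count();
}

// A two-element collection usable from Clojure-style and java.util-style callers.
final class Pair extends java.util.AbstractCollection<Object> implements Counted {
    private final Object e0, e1;

    Pair(Object e0, Object e1) { this.e0 = e0; this.e1 = e1; }

    // What Clojure-side code would call.
    @Override public int count() { return 2; }

    // What java.util consumers call; same answer, different name.
    @Override public int size() { return count(); }

    @Override public java.util.Iterator<Object> iterator() {
        return java.util.Arrays.asList(e0, e1).iterator();
    }
}
```

Extending `java.util.AbstractCollection` has the side benefit that `size()` is abstract there, so the compiler refuses to build the class if it is forgotten. Passing a `Pair` to `new java.util.ArrayList<>(...)` then works without any further methods.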

Luckily, we’re writing Java ourselves, so we can just use the abstract classes directly. Even so, in order to implement something that acts like Clojure’s persistent vectors, we need to implement all of the following:

    // returns the metadata on the object
    public IPersistentMap meta()

    // returns a new collection with the provided metadata
    clojure.lang.IObj withMeta(IPersistentMap meta)

    // returns the nth element, throwing an exception if the index is out of bounds
    Object nth(int i)

    // like nth, but returns 'notFound' if the index is out of bounds
    Object nth(int i, Object notFound)

    // returns the size of the vector
    int count()

    // returns an empty instance of the vector
    clojure.lang.IPersistentVector empty()

    // returns a new collection with 'val' at the given index
    clojure.lang.IPersistentVector assocN(int i, Object val)

    // returns a new collection with 'val' appended
    clojure.lang.IPersistentVector cons(Object val)

    // returns a new collection without the last element
    clojure.lang.IPersistentVector pop()

    // reduces over the collection, passing in both the index and value
    Object kvreduce(IFn f, Object init)

    // reduces over the collection, passing in just the value
    Object reduce(IFn f, Object init)

    // like 'reduce', but uses the first element as the 'init' value
    Object reduce(IFn f)

    // returns the JVM-style hash of this collection
    int hashCode()

    // returns the Clojure-style hash of this collection
    int hasheq()

    // returns the result of a JVM-style equality check
    boolean equals(Object o)

    // returns the result of a Clojure-style equality check
    boolean equiv(Object o)

    // returns the vector in array form
    Object[] toArray()

    // returns an iterator over the vector
    java.util.Iterator iterator()

Notice that since Clojure uses its own equality semantics, mostly to allow BigIntegers to be equal to their corresponding primitive numbers, it must implement its own hash code and equality methods. Clojure also recently began using a more expensive hashing scheme to minimize hash collisions.
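The gap between the two notions of equality is easy to reproduce with the JDK alone. The helper below is a deliberately simplified, integer-only stand-in for Clojure's numeric equivalence; `NumericEquality` and `integerEquiv` are hypothetical names, and the sketch makes no attempt to reproduce Clojure's actual rules for floats, ratios, or decimals.

```java
final class NumericEquality {
    // Compare two integral numbers by mathematical value rather than by boxed type.
    static boolean integerEquiv(Number a, Number b) {
        return toBig(a).equals(toBig(b));
    }

    // Widen any integral Number to BigInteger so the comparison is type-independent.
    private static java.math.BigInteger toBig(Number n) {
        return (n instanceof java.math.BigInteger)
            ? (java.math.BigInteger) n
            : java.math.BigInteger.valueOf(n.longValue());
    }
}
```

With this in place, `Long.valueOf(1L).equals(java.math.BigInteger.ONE)` is false under JVM semantics, while `NumericEquality.integerEquiv(1L, java.math.BigInteger.ONE)` is true. Any collection that wants the second behavior for its keys also needs a hash that agrees with it, which is why `equiv` and `hasheq` come as a pair.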

Most of these methods are trivial to implement if we know our size in advance, and simply have a field per element. Let’s consider a 2-vector, represented by the fields e0 and e1:

    int count() {
        return 2;
    }

    Object nth(int i, Object notFound) {
        switch (i) {
            case 0: return e0;
            case 1: return e1;
            default: return notFound;
        }
    }

    int hashCode() {
        int hash = 1;
        hash = (31 * hash) + (e0 == null ? 0 : e0.hashCode());
        hash = (31 * hash) + (e1 == null ? 0 : e1.hashCode());
        return hash;
    }

It’s easy to see how these can be programmatically generated for vectors of arbitrary size, and how the assembly generated for these functions will be extremely straightforward. In the hashCode() implementation, especially, where each element only requires a multiplication and addition (in addition to its own hashCode() call), the overhead of iterating over the collection can easily dwarf the cost of actually calculating the hash.
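As a rough illustration of that programmatic generation, the sketch below emits the source text of an unrolled hashCode() for any number of fields. The generator in this post is written in Clojure; this version is in Java purely for illustration, and `UnrolledSource` and `hashCodeMethod` are hypothetical names.

```java
final class UnrolledSource {
    // Emits the source of an unrolled hashCode() for fields e0 .. e(n-1).
    static String hashCodeMethod(int n) {
        StringBuilder sb = new StringBuilder();
        sb.append("public int hashCode() {\n");
        sb.append("    int hash = 1;\n");
        for (int i = 0; i < n; i++) {
            // One straight-line statement per field; no loop in the generated code.
            sb.append("    hash = (31 * hash) + (e").append(i)
              .append(" == null ? 0 : e").append(i).append(".hashCode());\n");
        }
        sb.append("    return hash;\n");
        sb.append("}\n");
        return sb.toString();
    }
}
```

Calling `UnrolledSource.hashCodeMethod(2)` yields the same two-statement body shown above for the 2-vector; the loop exists only at generation time.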

A less obvious method which benefits from unrolling is reduce:

    Object reduce(IFn f, Object init) {
        init = f.invoke(init, e0);
        if (RT.isReduced(init)) {
            return ((IDeref) init).deref();
        }
        init = f.invoke(init, e1);
        if (RT.isReduced(init)) {
            return ((IDeref) init).deref();
        }
        return init;
    }

In Clojure, reduction is the underlying mechanism for eager sequence processing. By optimizing this, we transitively optimize most operations over the data structure.
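The early-termination check is what makes the unrolled form slightly more than a chain of calls. Here is a self-contained sketch of the same shape without any Clojure dependency: `Reduced` is a local stand-in for Clojure's reduced wrapper, and `Vec3` is a hypothetical three-element vector.

```java
// Hypothetical stand-in for Clojure's reduced wrapper: signals "stop now, here is the answer".
final class Reduced {
    final Object value;
    Reduced(Object value) { this.value = value; }
}

final class Vec3 {
    private final Object e0, e1, e2;

    Vec3(Object e0, Object e1, Object e2) { this.e0 = e0; this.e1 = e1; this.e2 = e2; }

    // Unrolled reduce: one call per field, checking for early termination after each.
    Object reduce(java.util.function.BinaryOperator<Object> f, Object init) {
        Object acc = f.apply(init, e0);
        if (acc instanceof Reduced) return ((Reduced) acc).value;
        acc = f.apply(acc, e1);
        if (acc instanceof Reduced) return ((Reduced) acc).value;
        acc = f.apply(acc, e2);
        if (acc instanceof Reduced) return ((Reduced) acc).value;
        return acc;
    }
}
```

A reducing function that returns a `Reduced` on the second element is never invoked for the third, so operations like "find the first match" stop as early here as they would with an iterator.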

There are definitely diminishing returns on unwinding these sorts of loops. In a language like C, we’d have to balance the benefit of keeping our instructions small and able to fit in cache against the overhead of iteration (which is often vanishingly small with for loops on modern hardware). On the JVM, the cost of iterating with a java.util.Iterator can be significantly higher, but so too can the cost of unrolling. The JVM will only apply certain optimizations to functions that are smaller than a certain number of bytecode instructions. Past that, there is a size threshold at which it ceases to try to optimize the function at all.

This means that for smaller vectors we can safely unroll these collections, but past some point it makes sense to spill over into a more typical Clojure persistent vector. Based on some simple benchmarks I decided to set the magic threshold at six elements for both the vectors and maps, but a more exhaustive analysis may be useful here.

An interesting facet of 2-vectors is that clojure.lang.MapEntry implements both clojure.lang.IPersistentVector and java.util.Map.Entry, which means that we can treat a map as a sequence of 2-vectors. We cannot, however, simply add any 2-vector we have onto a map, since it expects a Map.Entry. Obviously we can’t have Clojure’s PersistentVector implement Map.Entry, since this is only valid when it has two elements. But since our unrolled implementation has an entire class dedicated to the two-element case, we can ensure that all 2-vectors can be conj’ed onto a map.
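The dual role of the two-element class can be sketched with JDK types only. `Tuple2` is a hypothetical name, and `java.util.AbstractList` stands in for Clojure's vector interfaces; the sketch inherits list-style `equals` and `hashCode`, so it does not attempt to satisfy the separate hashing contract that `Map.Entry` documents.

```java
// A two-element immutable value that is simultaneously a list and a map entry.
final class Tuple2 extends java.util.AbstractList<Object>
        implements java.util.Map.Entry<Object, Object> {
    private final Object e0, e1;

    Tuple2(Object e0, Object e1) { this.e0 = e0; this.e1 = e1; }

    // Vector view: positional access over exactly two fields.
    @Override public Object get(int i) {
        switch (i) {
            case 0: return e0;
            case 1: return e1;
            default: throw new IndexOutOfBoundsException();
        }
    }

    @Override public int size() { return 2; }

    // Map-entry view: the first element is the key, the second the value.
    @Override public Object getKey() { return e0; }

    @Override public Object getValue() { return e1; }

    @Override public Object setValue(Object v) {
        throw new UnsupportedOperationException("immutable");
    }
}
```

Only a class whose size is fixed at two can make this promise in its type; a general-purpose vector cannot, which is the point made above.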

implementing persistent maps

Clojure’s persistent maps have a similar panoply of functions that need to be implemented, and most can be implemented as simply as they can for the vectors. Unfortunately, when doing a lookup on a map we cannot simply jump to the correct entry; we have to scan over all entries and see if there’s a matching key:

    private int indexOf(int h, Object key) {
        if (key instanceof Keyword) {
            if (k0 == key) {
                return 0;
            } else if (k1 == key) {
                return 1;
            }
            return -1;
        }
        return indexOfObj(h, key);
    }

Notice we first check if the key is a clojure.lang.Keyword, which is typical for Clojure’s maps. Keywords have the nice property that there’s only ever a single instance of a keyword for a given string, which means that when checking if two keywords are equivalent, we only need to check if they’re the same object. This same optimization is already used in Clojure’s existing PersistentArrayMap.
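The property that makes the identity check safe is interning. A minimal sketch of the idea follows, with `Kw` as a hypothetical class; Clojure's real Keyword implementation is more involved, so treat this only as an illustration of why `==` suffices.

```java
final class Kw {
    // One shared table: each distinct name maps to exactly one Kw instance.
    private static final java.util.concurrent.ConcurrentHashMap<String, Kw> TABLE =
        new java.util.concurrent.ConcurrentHashMap<>();

    final String name;

    // Private constructor: the only way to obtain a Kw is through intern().
    private Kw(String name) { this.name = name; }

    // Always returns the same instance for equal names.
    static Kw intern(String name) {
        return TABLE.computeIfAbsent(name, Kw::new);
    }
}
```

Since the constructor is private, two `Kw` references with equal names are necessarily the same object, and a pointer comparison gives the same answer as a string comparison at a fraction of the cost.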

However, if the key is not a keyword, we have to fail over to a more generic lookup:

    private int indexOfObj(int h, Object key) {
        Util.EquivPred ep = Util.equivPred(key);
        if (h0 == h && ep.equiv(key, k0)) {
            return 0;
        } else if (h1 == h && ep.equiv(key, k1)) {
            return 1;
        }
        return -1;
    }

Notice that at each step, before doing a generic equivalence check we first compare their hashes. Unlike PersistentArrayMap, in addition to the keys and values the PersistentUnrolledMap also stores the hash code. This is for a very simple reason: most equivalence checks we do will be false. In the case where we’re constructing a map (i.e. adding new entries), all of our checks will be false. In the case where we’re looking up a value, depending on the key’s location we may need to scan all the other entries before arriving at it.

Clojure’s equivalence checks are relatively simple:

    static public boolean equiv(Object k1, Object k2) {
        if (k1 == k2) return true;
        if (k1 != null) {
            if (k1 instanceof Number && k2 instanceof Number) {
                return Numbers.equal((Number) k1, (Number) k2);
            } else if (k1 instanceof IPersistentCollection
                       || k2 instanceof IPersistentCollection) {
                return pcequiv(k1, k2);
            }
            return k1.equals(k2);
        }
        return false;
    }

First it checks if the two objects are identical, and then checks whether we need to use the special equivalence checks for numbers or collections, and otherwise falls through to the base Java equals() check. However, this is enough overhead that calls to equiv() are typically not inlined, especially when we’re doing many of them. Avoiding this entire stack of calls by doing a single integer comparison can be a significant gain.

This is at the cost of storing, rather than recomputing, the 32-bit hashes of each key. But hashes are also stored in a PersistentHashMap, so it’s a very reasonable tradeoff.
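The effect of the hash pre-check can be observed directly by counting how often equals() runs. The sketch below is an assumption-laden toy rather than the patch's code: it uses arrays and a loop instead of unrolled fields, plain `equals` instead of Clojure's `equiv`, and the names `CountingKey` and `HashedSlots` are hypothetical.

```java
// A key type that counts equality checks; the counter exists only for the demo.
final class CountingKey {
    static int equalsCalls = 0;
    final String s;

    CountingKey(String s) { this.s = s; }

    @Override public int hashCode() { return s.hashCode(); }

    @Override public boolean equals(Object o) {
        equalsCalls++;
        return o instanceof CountingKey && ((CountingKey) o).s.equals(s);
    }
}

final class HashedSlots {
    private final Object[] keys;
    private final int[] hashes;   // computed once, when the keys are stored

    HashedSlots(Object... keys) {
        this.keys = keys;
        this.hashes = new int[keys.length];
        for (int i = 0; i < keys.length; i++) hashes[i] = keys[i].hashCode();
    }

    // Compare the cached int first; only call equals() when the hashes match.
    int indexOf(Object key) {
        int h = key.hashCode();
        for (int i = 0; i < keys.length; i++) {
            if (hashes[i] == h && keys[i].equals(key)) return i;
        }
        return -1;
    }
}
```

With three stored keys whose hashes differ, looking up the last one costs a single `equals` call, and looking up an absent key costs none; without the cached hashes those lookups would take three calls each.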

implementing transient collections

Updating an unrolled collection is relatively inexpensive, since we don’t need to copy any underlying arrays, just directly instantiate a new instance:

    public IPersistentVector assocN(int i, Object val) {
        switch (i) {
            case 0: return new Card2(meta, val, e1);
            case 1: return new Card2(meta, e0, val);
            case 2: return cons(val);
            default: throw new IndexOutOfBoundsException();
        }
    }

(Note that Card2 is the classname of our 2-tuple vector implementation)

Still, no matter how cheap allocations are, it’s preferable to not make any. Clojure’s transient collections allow us to do in-place updates on collections in order to quickly construct them, and our unrolled collections also need a transient form. This ends up being a little strange, though, because this means we no longer have a 1-to-1 relationship between how many fields we have and how much data we contain. Instead, a single transient vector has room for six elements, and an empty transient map needs fields that can store six keys, values, and hashes.

This means that we can’t simply unroll our loops anymore - we only want to iterate over elements which have values. If we were iterating over an array, this would be easy:

    for (int i = 0; i < count; i++) {
        ...
    }

But since we don’t have an array, the obvious solution is a little ugly:

    if (count > 0) {
        ...
    }
    if (count > 1) {
        ...
    }
    ...

This creates a lot of instruction branches, and for smaller collections forces us to make multiple redundant checks (“Is 0 > 0? No? Well, is 0 > 1?”). We can short circuit these checks like so:

    if (count > 0) {
        ...
    } else {
        return ...;
    }
    if (count > 1) {
        ...
    } else {
        return ...;
    }
    ...

But this makes our code even more noisy, and the resulting bytecode even larger. Luckily, we have a solution in the form of Duff’s device, which uses the case fall-through behavior in switch to great advantage:

    private int indexOf(int h, Object key) {
        if (key instanceof Keyword) {
            switch (6 - count) {
                case 0:
                    if (k5 == key) {
                        return 5;
                    }
                case 1:
                    if (k4 == key) {
                        return 4;
                    }
                case 2:
                    if (k3 == key) {
                        return 3;
                    }
                case 3:
                    if (k2 == key) {
                        return 2;
                    }
                case 4:
                    if (k1 == key) {
                        return 1;
                    }
                case 5:
                    if (k0 == key) {
                        return 0;
                    }
                default:
                    return -1;
            }
        }
        return indexOfObj(h, key);
    }

Here we see the same key lookup as above, but wrapped in a switch, which will jump to a particular if clause, depending on the current count. If count is 1, we jump to case 5, and will only compare k0 before falling through to the default case. If count is 2, we jump to case 4, which means we’ll compare k1 and k0 before falling through to the default case, and so on. This means that instead of having to worry about bounds checking and short-circuiting, we simply jump over all the keys we don’t care about, and proceed from there.
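The same jump-then-fall-through shape works for any operation that touches only the populated slots. As a small, hedged illustration, here is a hypothetical three-slot transient vector whose `toArray` uses it; `TransientVec3` is not a class from the patch, and a real version would spill over into a larger structure instead of throwing when full.

```java
final class TransientVec3 {
    private Object e0, e1, e2;   // fixed slots; only the first `count` are populated
    private int count = 0;

    // In-place append, as transients do; returns this for chaining.
    TransientVec3 conj(Object val) {
        switch (count) {
            case 0: e0 = val; break;
            case 1: e1 = val; break;
            case 2: e2 = val; break;
            default: throw new IllegalStateException("full");
        }
        count++;
        return this;
    }

    Object[] toArray() {
        Object[] out = new Object[count];
        switch (count) {          // jump in at the last populated slot
            case 3: out[2] = e2;  // fall through
            case 2: out[1] = e1;  // fall through
            case 1: out[0] = e0;  // fall through
            default: return out;
        }
    }
}
```

With two elements added, the switch enters at `case 2`, never touches `e2`, and needs no per-slot bounds check. Note that the slots are visited from highest to lowest, so this shape suits operations whose result does not depend on visiting order.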

testing the collections

So, at the end of this exercise we have more than 5000 lines of Java, and we want to add them to the core implementation of Clojure. Ideally, we won’t introduce any bugs in the process. But the same unrolling that makes the code faster makes it significantly harder to simply read the code and verify it’s “correct”. The Clojure code which generates the Java, while more compact, is mostly concerned with string concatenating its way to proper syntax. The semantics of both codebases are a bit opaque.

But even if the code were perfectly clear, data structures are easy to get wrong and difficult to test. On a map, boundary conditions that need to be tested include key collisions, hash collisions, removing non-existent keys, conversions to and from transient collections, and so on. The exact boundary conditions depend on the underlying implementation, so a test suite that covers one implementation of a vector or map won’t necessarily cover another, even if they behave identically.

Given this, it seems like writing exhaustive unit tests is a poor way for us to make sure our implementation is sound. A better approach would be to use property-based testing, which instead of defining both inputs and expected behavior, allows us to just define invariants which must hold true across all inputs. The testing framework will then search through the space of inputs for one which breaks the invariant, and then reduce the input down to the simplest possible reproducing case.

This is an especially nice approach for us, since both the inputs and invariants are straightforward. The inputs are all possible actions that can be performed on the data structure (conj, disj, assoc, dissoc, etc.), and the invariant is that it must behave just like the existing implementation, no matter what actions we take. Luckily there’s a readymade library for this purpose: collection-check. This library has been used to validate most (possibly all) of the alternate data structures in the Clojure ecosystem. It also uncovered a bug in Clojure’s own implementation of transient maps, which is discussed in more detail in this talk.

But while collection-check is a useful tool for validating the unrolled collections, we still need to map it effectively onto the underlying implementation. The initial tests for the collections only checked maps of integers onto integers, which skipped over the special code paths dedicated to keywords. When an additional test was run for maps of keywords onto integers, an off-by-one error in the Duff’s device snippet discussed above was found.
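The core idea, random action sequences checked against a trusted reference, can be sketched without any library. This is not collection-check and it does no shrinking of failing inputs; `ScanMap` is a hypothetical map under test, `DifferentialCheck` is a hypothetical harness, and `java.util.HashMap` plays the role of the existing implementation.

```java
// Subject under test: a deliberately simple linear-scan map (hypothetical).
final class ScanMap {
    private Object[] keys = new Object[4];
    private Object[] vals = new Object[4];
    private int count = 0;

    private int indexOf(Object k) {
        for (int i = 0; i < count; i++) if (keys[i].equals(k)) return i;
        return -1;
    }

    Object get(Object k) { int i = indexOf(k); return i < 0 ? null : vals[i]; }

    int size() { return count; }

    void put(Object k, Object v) {
        int i = indexOf(k);
        if (i >= 0) { vals[i] = v; return; }
        if (count == keys.length) {            // grow both arrays together
            keys = java.util.Arrays.copyOf(keys, count * 2);
            vals = java.util.Arrays.copyOf(vals, count * 2);
        }
        keys[count] = k; vals[count] = v; count++;
    }

    void remove(Object k) {
        int i = indexOf(k);
        if (i < 0) return;                     // removing a missing key is a no-op
        count--;
        keys[i] = keys[count]; vals[i] = vals[count];   // move last entry into the hole
        keys[count] = null; vals[count] = null;
    }
}

final class DifferentialCheck {
    // Returns the index of the first diverging step, or -1 if the run agrees throughout.
    static int run(long seed, int steps) {
        java.util.Random rnd = new java.util.Random(seed);
        ScanMap subject = new ScanMap();
        java.util.Map<Object, Object> reference = new java.util.HashMap<>();
        for (int step = 0; step < steps; step++) {
            // Mix key types so that type-specific code paths are exercised too.
            Object key = rnd.nextBoolean() ? (Object) rnd.nextInt(8) : "k" + rnd.nextInt(8);
            switch (rnd.nextInt(3)) {
                case 0: subject.put(key, step); reference.put(key, step); break;
                case 1: subject.remove(key); reference.remove(key); break;
                default: break;                // lookup only
            }
            if (subject.size() != reference.size()) return step;
            if (!java.util.Objects.equals(subject.get(key), reference.get(key))) return step;
        }
        return -1;
    }
}
```

The key generator deliberately draws from more than one type. As the keyword case above shows, a generator that only ever produces integers leaves whole branches of the implementation untested, however many steps are run.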

bringing it all together

The results of this work can be found in the cambrian-collections library, the output of which has been submitted as a patch for Clojure, and is currently under review to be merged into the Clojure 1.8.0 release. Initial performance tests are promising: the cost of building collections when deserializing JSON in Cheshire is halved with unrolled collections, giving us a 33% overall improvement in performance. Since at Factual we spend quite a bit of time deserializing data from disk, this will have a meaningful and immediate impact on our daily operations. We hope it will have a similar benefit for others.

This post was originally on the Factual blog, which has since been taken down post-acquisition.